Getting honest feedback from customers should be simple. But I’ve seen countless businesses waste this opportunity by asking the wrong questions.

Research analyzing a large sample of surveys found that the average response rate is 44.1%, which means more than half of the people you ask never respond. Bad survey questions make this number even worse. When your questions confuse people or push them toward certain answers, you’re not collecting real feedback. You’re just wasting everyone’s time.

That’s why I’m here to help. In this article, I’ll show you the 8 most common types of bad survey questions that kill your data quality. More importantly, I’ll teach you exactly how to fix each one so you can start collecting feedback that actually helps your business grow.

Let’s get started!

Table of Contents:

- What Are Bad Survey Questions?

- Why Bad Survey Questions Matter

- 8 Types of Bad Survey Questions (With Examples)

- How to Write Better Survey Questions

- The Best Tool for Creating Better Survey Questions

- FAQs About Bad Survey Questions

What Are Bad Survey Questions?

A bad survey question is any question that prevents people from giving honest, objective answers.

These questions use biased language, make unfair assumptions, or confuse respondents. They might seem innocent at first. But they seriously damage the quality of your survey data.

Think of it this way: if your question pushes people toward a specific answer, you’re not really asking. You’re telling them what to say.

Survey question bias occurs when questions are phrased or formatted in ways that skew respondents toward certain answers, or when questions are hard to understand.

This makes it difficult for customers to answer honestly, preventing them from sharing their true thoughts and experiences.

Why Bad Survey Questions Matter

Bad survey questions don’t just give you wrong answers. They create bigger problems for your entire business.

Here’s what happens when your surveys are full of poorly written questions:

- Survey fatigue sets in. When people struggle to answer confusing questions, they lose interest fast. They’ll either abandon your survey or rush through it without reading carefully.

- Your response rates drop. Frustrated customers simply stop participating, so fewer people finish your survey and you have less data to work with.

- You make bad business decisions. When your data is inaccurate, you can’t trust any decision based on it. You might invest in features nobody wants or ignore real problems your customers face.

To put it simply, the cost of bad survey questions isn’t just about wasted time. It’s about missed opportunities to truly understand and serve your customers better.

8 Types of Bad Survey Questions (With Examples)

Now let’s look at the specific types of bad survey questions you need to avoid. I’ll show you real examples and explain exactly how to fix each one.

1. Leading Questions

Leading questions use biased language that pushes people toward a specific answer.

These questions include subjective words or opinions that influence how people respond. Instead of letting customers share their honest views, you’re basically putting words in their mouths.

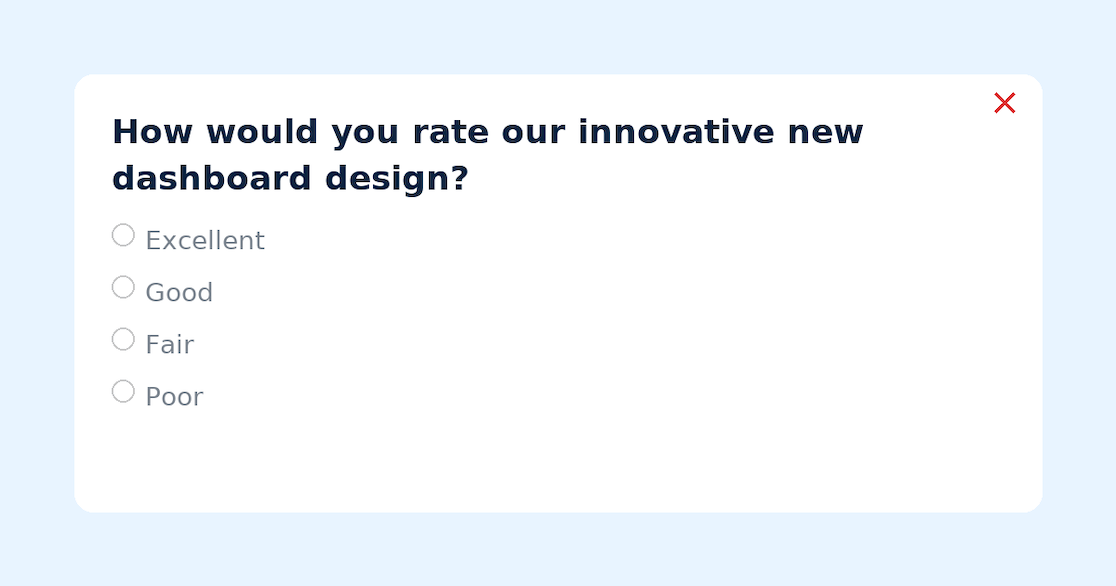

Here’s an example:

Bad: “How would you rate our innovative new dashboard design?”

The word “innovative” already tells people this is supposed to be special. It makes them feel like they should rate it highly, even if they found it confusing.

How to fix it:

Remove subjective adjectives and any framing that hints at the “right” answer. Ask neutral questions that show genuine curiosity:

Better: “How would you rate the new dashboard design?”

See the difference? The fixed version doesn’t assume anything. It simply asks for an honest rating.

Another example:

Bad: “How disappointed were you with the delayed shipment?”

This assumes they WERE disappointed, which might not be true.

Better: “How did you feel about your shipment timeline?”

The key is to let customers guide their own responses without any pressure from how you phrase the question.

2. Loaded Questions

Loaded questions contain built-in assumptions about your customers.

These questions assume certain facts about the person answering, even though you never asked about those facts. This forces people into uncomfortable positions where any answer feels wrong.

Here’s what I mean:

Bad: “How many times per week do you use our mobile app?”

This assumes the person uses the app weekly. What if they only use it monthly? Or never? There’s no good way for them to answer honestly.

Bad: “What’s your favorite feature in the premium tier?”

This assumes they’re on the premium tier. If they’re not, they can’t answer accurately.

How to fix it:

Never make assumptions. Ask qualifying questions first, or provide an “opt-out” option:

Better approach: First ask, “Do you currently use our mobile app?” Then, if they say yes, ask “How frequently do you typically use it?”

You can also add answer options like “I don’t use the mobile app” or “Not applicable” to give people an honest way out.
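If you build surveys in code rather than in a form builder, this qualifying-question pattern is usually called skip or branching logic. Here’s a minimal Python sketch of the idea. The question IDs, wording, and the `next_question` helper are hypothetical and don’t reflect any particular survey tool’s API.

```python
from typing import Dict, Optional

# Hypothetical question bank: IDs and wording are illustrative only.
QUESTIONS = {
    "uses_app": "Do you currently use our mobile app?",
    "app_frequency": "How frequently do you typically use it?",
}


def next_question(answers: Dict[str, str]) -> Optional[str]:
    """Return the ID of the next question to show, or None when finished."""
    if "uses_app" not in answers:
        return "uses_app"  # always ask the qualifying question first
    if answers["uses_app"] == "Yes" and "app_frequency" not in answers:
        return "app_frequency"  # only app users see the follow-up
    return None  # non-users (and users who already answered) are done


# Simulate two respondents with different answers to the qualifier.
for answers in ({"uses_app": "Yes"}, {"uses_app": "No"}):
    qid = next_question(answers)
    print(answers, "->", QUESTIONS.get(qid, "survey complete"))
```

Because the frequency question is never shown to non-users, nobody is forced to answer a question built on an assumption that doesn’t apply to them.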

The rule is simple: only work with information people actually give you, nothing more.

3. Questions with Jargon

Jargon means using technical terms or industry slang that your average customer won’t understand.

What’s obvious to you might be completely foreign to them. When people don’t understand your question, they’ll either guess at what you mean or just skip it entirely.

Examples of jargon problems:

Bad: “How would you rate our API response latency?”

Unless you’re surveying developers, most customers have no idea what API latency means. They might think you’re asking about shipping delays or website speed.

Bad: “Did our solution improve your CAC by reducing funnel friction?”

Customer Acquisition Cost and funnel friction are marketing terms. Regular users won’t understand this.

How to fix it:

Use plain, simple language that anyone can understand:

Better: “How quickly does our software respond when you use it?”

Better: “Did our product help you attract more customers?”

Always assume your customer has zero knowledge of your internal terms or industry buzzwords. Write for a 7th-grader, and you’ll get much better responses.

4. Absolute Questions

Absolute questions use words like “always,” “never,” “all,” or “every” that force people into extreme positions.

Real life rarely works in absolutes. When you ask someone if they “always” do something, you’re setting them up to give a misleading answer, because even one exception makes the answer “no.”

Examples:

Bad: “Do you always read our monthly newsletter?”

Most people will answer “no” even if they read it regularly, because they might have skipped one issue. You lose valuable data about frequent readers.

Bad: “Do you never experience problems with our software?”

The word “never” is too rigid. Someone might have had one minor issue six months ago, forcing them to answer “no” even though they’re generally satisfied.

How to fix it:

Replace absolutes with a Likert-style frequency scale that gives people a realistic range of options:

Better: “How often do you read our monthly newsletter?” → Always / Usually / Sometimes / Rarely / Never

Better: “How often do you experience problems with our software?” → Daily / Weekly / Monthly / Rarely / Never

This gives you much more useful data about actual behavior patterns instead of forcing people into all-or-nothing answers.

5. Double-Barreled Questions

Double-barreled questions ask about two different things at once but expect only one answer.

You can spot these easily because they usually contain the words “and” or “or.” They’re tempting because you think you’re being efficient. But really, you’re just confusing people.

Here’s an example:

Bad: “How satisfied are you with our shipping speed and packaging quality?”

Which one should people rate? If shipping was fast but the box arrived damaged (or vice versa), how can they answer accurately? They can’t.

Bad: “Was the registration process simple and did you complete it successfully?”

Again, these are two completely separate questions that need separate answers. Someone might find it simple but still abandon it for other reasons.

How to fix it:

Split every double-barreled question into two distinct questions:

Better:

- “How satisfied are you with our shipping speed?”

- “How satisfied are you with our packaging quality?”

Now you get clear, actionable data on each topic. You’ll know exactly what needs improvement and what’s working well.

Don’t try to save time by combining questions. You’ll just end up with useless data that confuses more than it clarifies.
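If you draft a lot of surveys, the “and”/“or” rule of thumb is easy to turn into a first-pass check. This is a rough Python sketch, not a real grammar parser: the function name is hypothetical, it will flag harmless phrases like “agree or disagree,” and it will miss double-barreled questions written without a conjunction.

```python
import re

# Conjunctions that often (but not always) signal two topics in one question.
CONJUNCTIONS = re.compile(r"\b(and|or)\b", re.IGNORECASE)


def maybe_double_barreled(question: str) -> bool:
    """Flag a question for manual review if it contains 'and' or 'or'."""
    return bool(CONJUNCTIONS.search(question))


drafts = [
    "How satisfied are you with our shipping speed and packaging quality?",
    "How satisfied are you with our shipping speed?",
]
for q in drafts:
    print(maybe_double_barreled(q), "-", q)
```

Treat a flag as a prompt to reread the question yourself, not as a verdict.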

6. Double Negatives

Double negatives use two negative words in the same sentence.

They’re grammatically confusing and force people to work too hard to understand what you’re actually asking. When someone has to re-read your question three times, that’s a sign of bad writing.

Examples:

Bad: “We should not avoid implementing this feature, agree or disagree?”

People have to pause and mentally untangle this. Does agreeing mean the feature should be implemented? It’s a mess.

Bad: “Our refund policy isn’t unclear, true or false?”

Too many negatives make this unnecessarily complicated. Is “true” good or bad here?

How to fix it:

Rewrite using positive or neutral phrasing:

Better: “We should implement this feature, agree or disagree?”

Better: “Our refund policy is clear, true or false?”

Look for negative words like “not,” “don’t,” “isn’t,” “unless,” “barely,” and “scarcely,” as well as negative prefixes like “un-” (as in “unclear”). If you see two of these in one sentence, you probably need to rewrite it.
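The “two negatives in one sentence” check can also be sketched in a few lines of Python. This is a minimal illustration under loose assumptions: the word list is only a starting point (it adds “avoid” and “unclear” so the two examples above are caught), any “n’t” contraction counts as a negative, and the function names are hypothetical.

```python
import re

# Illustrative negative words; extend this set for your own drafts.
NEGATIVE_WORDS = {"not", "no", "never", "unless", "barely", "scarcely", "avoid", "unclear"}


def count_negatives(question: str) -> int:
    """Count negative words, treating any "n't" contraction as a negative."""
    text = question.lower().replace("\u2019", "'")  # normalize curly apostrophes
    words = re.findall(r"[a-z']+", text)
    return sum(1 for w in words if w in NEGATIVE_WORDS or w.endswith("n't"))


def has_double_negative(question: str) -> bool:
    return count_negatives(question) >= 2


for q in [
    "We should not avoid implementing this feature, agree or disagree?",
    "Our refund policy isn't unclear, true or false?",
    "Our refund policy is clear, true or false?",
]:
    print(has_double_negative(q), "-", q)
```

A simple word count like this can’t understand meaning, so use it to catch candidates for rewriting rather than to approve questions.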

7. Poor Answer Scales

Your answer options can be just as problematic as the questions themselves.

Poor answer scales don’t match the question, overlap in confusing ways, or fail to cover all reasonable responses. This forces people to choose answers that don’t truly reflect their views.

Common problems:

Mismatched scales:

Bad: “How often do you visit our website?” → Yes/No

A yes/no answer doesn’t tell you HOW OFTEN something happens. It’s completely wrong for this question.

Better: “How often do you visit our website?” → Daily / Weekly / Monthly / Rarely / Never

Overlapping options:

Bad: “What’s your age range?” → 20-30 | 30-40 | 40-50

What if someone is exactly 30 or 40? Do they choose both options? It’s unclear.

Better: Under 20 | 20-29 | 30-39 | 40-49 | 50+
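If you keep your answer options in a spreadsheet or config file, overlaps like this are easy to catch automatically. Here’s a minimal Python sketch, assuming each option is written as an inclusive range of whole years and that `None` marks an open-ended top option such as “50+.” The `find_problems` helper is hypothetical, and it only checks the boundaries between options, not whether the lowest or highest option covers everyone.

```python
from typing import List, Optional, Tuple

# Each option is (low, high) in whole years, both inclusive.
# high=None means open-ended, like "50+".
AgeRange = Tuple[int, Optional[int]]


def find_problems(ranges: List[AgeRange]) -> List[str]:
    """Report overlaps and gaps between neighboring age options."""
    problems = []
    ordered = sorted(ranges, key=lambda r: r[0])
    for (lo1, hi1), (lo2, hi2) in zip(ordered, ordered[1:]):
        if hi1 is None or lo2 <= hi1:
            problems.append(f"{lo1}-{hi1} overlaps {lo2}-{hi2}")
        elif lo2 > hi1 + 1:
            problems.append(f"gap between {hi1} and {lo2}")
    return problems


print(find_problems([(20, 30), (30, 40), (40, 50)]))
print(find_problems([(20, 29), (30, 39), (40, 49), (50, None)]))
```

The first call reports both overlaps (at 30 and at 40), while the second returns an empty list because every boundary is clean.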

💡 Pro Tip: Are you hoping to leverage surveys to understand your visitors better? See our guide to demographic survey questions, complete with examples!

Unbalanced scales:

Bad: “Rate our service:” → Poor | Acceptable | Good | Excellent | Outstanding

This scale has four positive options and only one negative. It’s heavily skewed.

Better: Very Poor | Poor | Average | Good | Excellent

How to fix it:

- Make sure your scale matches what you’re asking

- Use mutually exclusive options (no overlap)

- Balance your scale (equal positive and negative options)

- Include an “other” option when appropriate

Always test your answer options before launching the survey. If you’re confused about which option to choose, your customers will be too.

8. Random Questions

Random questions have nothing to do with your survey’s actual goal or the customer’s current experience.

These questions pop up out of nowhere and make people wonder why you’re even asking. They break the flow of your survey and often lead to abandoned responses.

Example:

Asking “How satisfied are you with our phone support?” when the person has only ever used email support makes zero sense. They don’t have a recent experience to reference, so any answer they give will be meaningless.

How to fix it:

Use segmentation to show the right questions to the right people:

- Only ask about features customers have actually used

- Time your surveys appropriately (right after an experience, not months later)

- Make sure every question connects to the survey’s stated purpose

If you tell someone the survey is about their recent purchase experience, every question should relate to that topic.

Don’t randomly throw in questions about features they’ve never tried or services they haven’t used.

The best surveys feel like natural conversations, not random interrogations.

How to Write Better Survey Questions

Now that you know what to avoid, let’s talk about what you should do instead.

- Ask personalized questions based on customer behavior. Research shows that sending surveys to a clearly defined and refined population positively impacts the response rate. Don’t send the same generic survey to everyone. Segment your audience and ask questions that match their specific experiences.

- Clearly outline your survey’s purpose upfront. Tell people why you’re asking and what you’ll do with their feedback. This builds trust and shows you value their time.

- Keep questions simple and straightforward. Use plain language. Short sentences. No jargon. If a 7th-grader couldn’t understand it, rewrite it.

- Ask one thing at a time. Remember the double-barreled problem? Break complex topics into multiple simple questions. It takes a bit more time but gives you much better data.

- Provide balanced answer options. Your scales should always have equal positive and negative options, with a neutral middle ground. Don’t make people choose between “good” and “amazing” when they might actually feel negative.

- Test your survey before launching it. Read every question out loud. Take the survey yourself. Better yet, have someone unfamiliar with your business take it. They’ll spot confusing questions immediately.

I’ve found that the best surveys are the ones that feel effortless to complete.

When customers can breeze through your questions without confusion or frustration, you’ll get honest feedback that actually helps your business grow.

The Best Tool for Creating Better Survey Questions

Now that you know how to avoid bad survey questions, you need a tool that makes it easy to create well-designed surveys.

After years of working with different survey platforms, I recommend UserFeedback as the best solution for WordPress users.

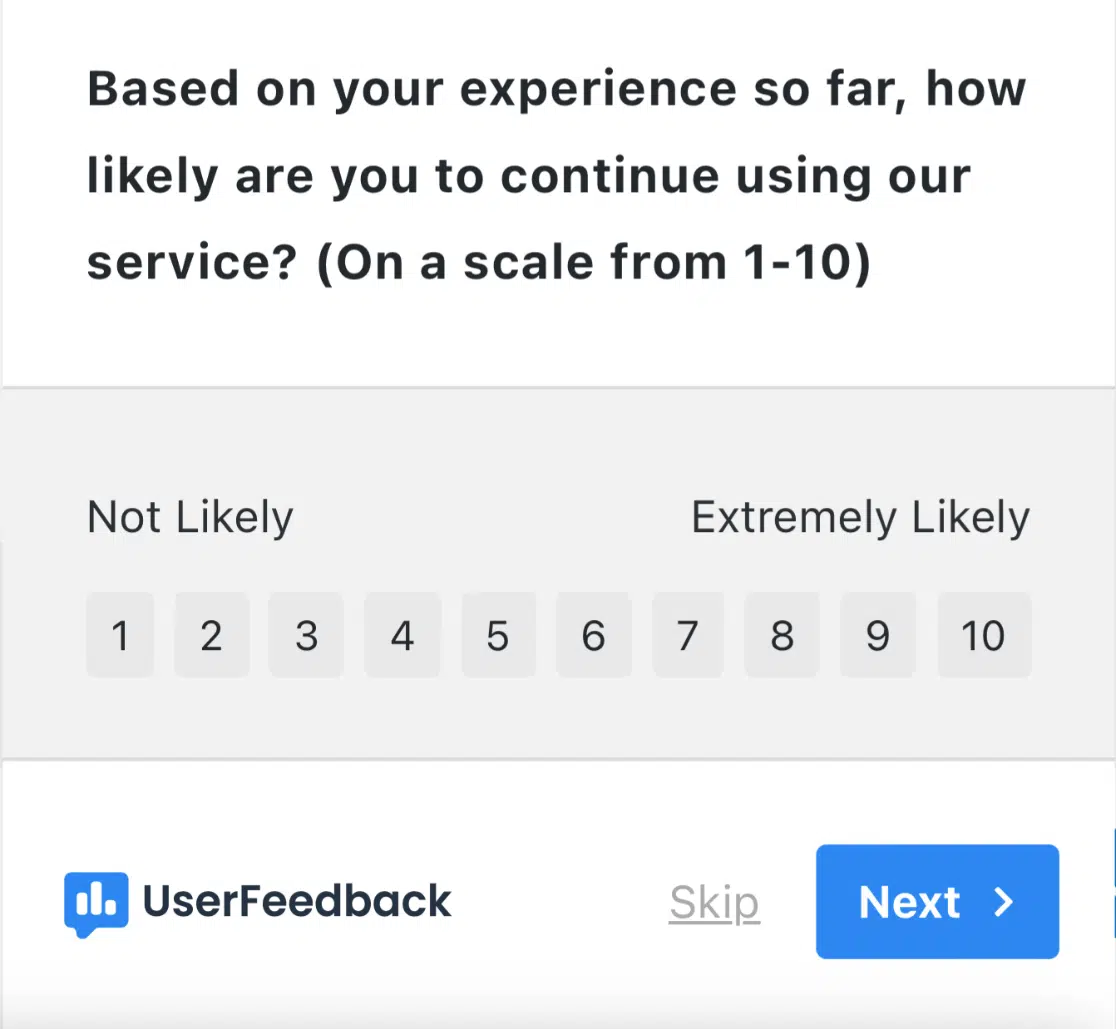

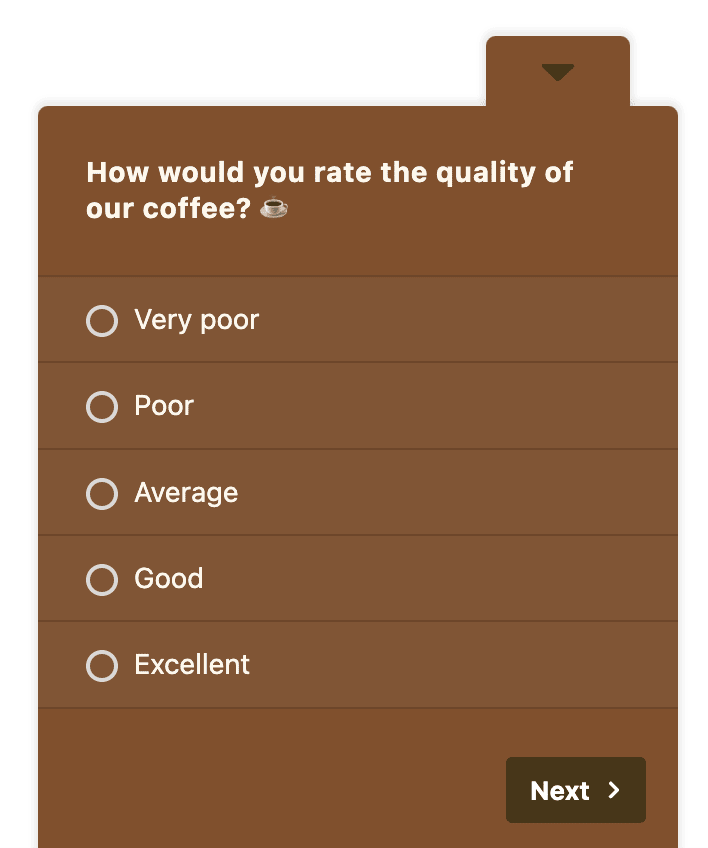

UserFeedback is the leading feedback plugin for WordPress, designed to help you create effective surveys without any coding skills. What I love most is how it prevents many of the common mistakes we’ve discussed in this guide.

Here’s why I recommend UserFeedback for your surveys:

- Pre-built survey templates that are already optimized with neutral, unbiased questions – including NPS, CSAT, and CES surveys that follow best practices

- Conditional logic that lets you ask follow-up questions based on previous answers, eliminating the need for loaded questions or assumptions

- Smart targeting so you can show the right questions to the right people, preventing random questions that don’t apply to specific users

- Multiple question types including rating scales, multiple choice, and open-ended questions, making it easy to match your answer options to each question

- Comment boxes that capture detailed feedback alongside ratings, giving you both quantitative and qualitative data

- Custom branding to make your surveys look professional and trustworthy, which can help increase response rates

- Mobile optimization that ensures all surveys display properly on any device, preventing formatting issues that confuse respondents

- Detailed reporting dashboard that automatically calculates important metrics like Net Promoter Scores

- AI feedback analysis that helps you summarize and analyze survey results with a single click

Plus, UserFeedback integrates directly with Google Analytics through MonsterInsights, giving you deeper insights into how your surveys perform and which questions get the best engagement.

The platform steers you away from common survey mistakes by providing tested templates and clear question formats. Starting from these templates makes it much harder to accidentally create double-barreled questions or confusing answer scales.

Get started with UserFeedback today and start collecting honest, actionable feedback with surveys that actually work!

And that’s it!

I hope you found this guide to avoiding bad survey questions helpful. If you liked it, I’d recommend you also check out:

- Ultimate Guide to Website Feedback

- 44 Customer Survey Questions + Guide to Meaningful Feedback

- 10 Online Survey Examples to Get You Started

- How to Calculate NPS Score (Net Promoter Score) + Examples

And don’t forget to follow us on X and Facebook to learn more about online surveys, customer feedback, and survey best practices.

FAQs About Bad Survey Questions

What makes a survey question bad?

A bad survey question uses biased language, makes assumptions, confuses respondents, or pushes people toward specific answers. It prevents you from collecting honest, objective feedback.

What’s the difference between leading and loaded questions?

Leading questions use biased language to push you toward a specific answer (“Don’t you love our amazing product?”). Loaded questions make assumptions about you that might not be true (“Where do you drink coffee?” assumes you drink coffee).

How can I identify double-barreled questions?

Look for the words “and” or “or” in your questions. If you’re asking about multiple topics but only allowing one answer, it’s double-barreled. Split it into separate questions.

Why should I avoid jargon in surveys?

Most people don’t understand industry terms or internal acronyms. If they can’t understand your question, they’ll either guess or skip it entirely. Both options give you worthless data.

What’s wrong with using double negatives in surveys?

Double negatives force people to work too hard to understand what you’re actually asking. Confused respondents give inaccurate answers or abandon your survey.

How do I create better answer scales?

Match your scale to the question type, use mutually exclusive options, balance positive and negative choices equally, and always include a neutral option. Test your scales before launching to make sure they make sense.